Rearchitecting enterprise customer experience for the MCP generation

The rules of AI write themselves anew almost daily. People and brands are rushing to define what AI means and how to make it work, but a single question stands above the rest: Who controls the future of your customer and employee experiences—you, or your vendors? At Avaya, we believe the answer should be you. That’s why we are embracing the open future of AI, building the Avaya Infinity platform to support Model Context Protocol (MCP). It’s the right move—for our customers, for the market, and for the kind of trust and innovation that will define what’s next for enterprises and public sector agencies.

What is MCP?

MCP stands for Model Context Protocol, an emerging open standard that enables AI models (such as GPT, Claude, or Gemini) to interact securely and reliably with external tools, data sources, APIs, and user context in a structured way. It allows AI to operate with memory, relevance, and continuity: not in isolation, but in sync with enterprise context, user history, and operational intent. That makes AI far more useful in high-stakes, high-scale environments.

"This is not a 'wait-and-see' moment. Avaya believes the time to be intentional about building the definitive open orchestration engine for the modern enterprise is now."

David Funck, Chief Technology Officer, Avaya

How does MCP work?

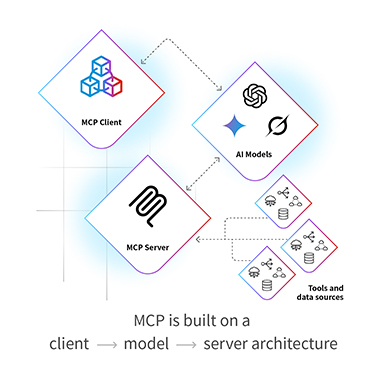

MCP is built on a client → model → server architecture:

- The Client gathers context (user info, role, task, environment).

- The Model (e.g., Claude, GPT) receives the context and a list of available tools or actions.

- The Server hosts the tools (APIs, databases, etc.) and executes any actions the model requests.

All communication follows structured schemas so everything is interpretable, traceable, and secure.
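The exchange described above can be sketched in a few lines of Python. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the public MCP specification. Everything else here is an assumption made for illustration: the `check_ticket_status` tool, the in-process `handle_request` function standing in for a real transport, and the omission of the initialization handshake a real session performs first.

```python
import json

# Hypothetical tool: a real server would query a ticketing system here.
def check_ticket_status(ticket_id: str) -> str:
    return f"Ticket {ticket_id} is open"

# Hypothetical registry of tools hosted by the server, each with a JSON Schema
# describing the arguments it accepts.
TOOLS = {
    "check_ticket_status": {
        "handler": check_ticket_status,
        "description": "Look up the status of a support ticket.",
        "inputSchema": {
            "type": "object",
            "properties": {"ticket_id": {"type": "string"}},
            "required": ["ticket_id"],
        },
    }
}

def handle_request(raw: str) -> str:
    """Server side: answer a JSON-RPC 2.0 request for tools/list or tools/call."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        # Advertise the available tools and their argument schemas.
        result = {"tools": [
            {"name": name,
             "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif req["method"] == "tools/call":
        tool = TOOLS[req["params"]["name"]]
        args = req["params"].get("arguments", {})
        # Minimal check against the schema's required fields only.
        missing = [k for k in tool["inputSchema"]["required"] if k not in args]
        if missing:
            text, is_error = f"Missing arguments: {missing}", True
        else:
            text, is_error = tool["handler"](**args), False
        result = {"content": [{"type": "text", "text": text}], "isError": is_error}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

def rpc(method: str, params=None, id_: int = 1) -> str:
    """Client side: build a JSON-RPC 2.0 request string."""
    msg = {"jsonrpc": "2.0", "id": id_, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

# 1. The client asks which tools exist, so the model can be told about them.
listing = json.loads(handle_request(rpc("tools/list")))
# 2. The model picks a tool; the client forwards the call to the server.
call = json.loads(handle_request(rpc(
    "tools/call",
    {"name": "check_ticket_status", "arguments": {"ticket_id": "T-1001"}},
    id_=2,
)))

print(listing["result"]["tools"][0]["name"])
print(call["result"]["content"][0]["text"])
```

Because both the tool list and the call carry explicit schemas and request IDs, each step can be logged and replayed, which is what makes the exchange interpretable and traceable.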

Avaya Infinity with MCP: Achieving AI-powered enterprise communications intelligence

This demonstration shows how enterprise communications supervisors or anyone from the business can not only analyze data, but also build their own dashboards and perform analyses in real time, independent of traditional interfaces.

Killing friction with AI and MCP

For too long, integrating AI into customer experience has meant choosing the lesser of two evils:

- Lock-in with a single provider’s closed ecosystem, sacrificing flexibility and future optionality

- Chaos through brittle, costly, one-off integrations across multiple tools and data silos

This false choice limits innovation and burdens IT teams. It creates fragmented experiences that erode trust—with both customers and employees. In a world that moves at the speed of AI, this approach is simply unsustainable.

Enter MCP, the open, vendor-agnostic standard that removes this false choice. With MCP, AI models can interact with tools, data, and operational logic securely and at scale. A common metaphor is to think of it as the USB-C of AI: one universal connector that works across models, platforms, and enterprise systems.

MCP was created and released by Anthropic in late 2024. Its rapid adoption by major technology players such as OpenAI, Google DeepMind, and Microsoft underscores its strategic importance. This alignment indicates a collective realization that proprietary integrations hinder market growth more than closed ecosystems help any single vendor. We are currently witnessing and co-creating a foundational shift toward interoperability, transparency, and user control.

MCP enables AI systems to operate with dynamic awareness of user, session, and business context. For Avaya Infinity, this means models can consistently deliver responses that are not only accurate but also relevant, personalized, and on-brand — even across fragmented ecosystems and at enterprise scale. This level of contextual intelligence is critical for organizations managing thousands of workflows, users, and integration points across operations.

Collaboration with Databricks

As part of our commitment to secure, scalable, and open AI, we are partnering with Databricks to bring enterprise-grade governance and data privacy to the MCP implementation within the Avaya Infinity platform. This collaboration ensures that enterprise customers can confidently deploy AI tools with fine-grained access control, audit logging, and seamless integration across structured and unstructured data sources.
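To make "fine-grained access control" and "audit logging" concrete, here is a minimal sketch of gating tool calls by role and recording every decision. It is not the Avaya Infinity or Databricks implementation: the roles, the `ROLE_PERMISSIONS` policy table, the tool names, and the `authorize_and_log` helper are all hypothetical, chosen only to illustrate the idea.

```python
from datetime import datetime, timezone

# Hypothetical policy: which tools each role may invoke.
ROLE_PERMISSIONS = {
    "supervisor": {"search_knowledge_base", "update_crm"},
    "agent": {"search_knowledge_base"},
}

# In-memory stand-in for a durable, append-only audit log.
AUDIT_LOG = []

def authorize_and_log(user: str, role: str, tool: str) -> bool:
    """Return True if the role may call the tool; record the decision either way."""
    allowed = tool in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "tool": tool,
        "decision": "allow" if allowed else "deny",
    })
    return allowed

# A supervisor may update the CRM; an agent may not.
print(authorize_and_log("dana", "supervisor", "update_crm"))
print(authorize_and_log("lee", "agent", "update_crm"))
print([entry["decision"] for entry in AUDIT_LOG])
```

Logging denials as well as approvals is a deliberate choice in this sketch: the record of what was refused is often as useful to a compliance review as the record of what ran.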

"Generative AI offers tremendous potential to transform customer experiences, and we are thrilled to collaborate with Avaya to help organizations quickly unify their data, simplify data and AI governance and security, and ultimately deliver AI that understands their business."

Heather Akuiyibo, VP of GTM Integration, Databricks

From fragmented to freedom with MCP

In a typical enterprise, there are 'N' number of tools and data sources (e.g., Salesforce, a product database, a knowledge base) and 'M' number of AI models or applications that need to access them. Before MCP, connecting each tool to each AI model required a unique, point-to-point integration. This created a combinatorial explosion of development work, resulting in an architecture that was expensive to build, difficult to maintain, and inherently unscalable.
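A few lines of arithmetic show how quickly this grows. The sketch below counts connectors under point-to-point integration (one per tool and model pair) and, for comparison, under a shared protocol layer where each tool and each model needs only one adapter. The function names and the sample sizes are illustrative assumptions, not measurements.

```python
def point_to_point_connectors(n_tools: int, m_models: int) -> int:
    """Every tool needs its own integration with every model: N x M."""
    return n_tools * m_models

def shared_protocol_adapters(n_tools: int, m_models: int) -> int:
    """Each tool and each model is adapted once to a common protocol: N + M."""
    return n_tools + m_models

# Illustrative sizes only.
for n, m in [(3, 2), (10, 5), (50, 10)]:
    print(f"{n} tools, {m} models: "
          f"{point_to_point_connectors(n, m)} connectors vs "
          f"{shared_protocol_adapters(n, m)} adapters")
```

With 10 tools and 5 models, that is 50 custom connectors against 15 adapters, and the gap widens as either number grows.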

MCP transforms this paradigm by introducing a standardized intermediary layer, converting the complex "N × M" problem into a simple and scalable "N + M" solution. Each of the 'N' tools only needs to be wrapped with a single MCP server, and each of the 'M' AI models only needs a single MCP client. Once a component can "speak" MCP, it can communicate with any other MCP-compliant component in the ecosystem. This dramatically reduces development overhead, eliminates redundant work, and eradicates the technical debt associated with maintaining dozens of fragile, custom connectors.

Investments in new technologies, channels, and modalities meant to unify customer interactions often fracture them. Customers end up feeling less known, less cared for, and less connected to the businesses that serve them than ever before. For many organizations, this pain is felt most deeply in enterprise communications.

Avaya is turning this tide, unifying the fragmented enterprise communications experience by connecting the channels, insights, technologies, and workflows that help deliver best-in-class interactions.

The potential ROI of MCP

With MCP, Avaya Infinity users will realize even deeper business value: faster resolution times, more natural conversations, stronger brand consistency, and higher satisfaction across customer and employee interactions. It unlocks a new tier of performance in generative AI, where outputs aren’t just intelligent — they’re situationally aware and strategically aligned.

Early research suggests MCP will yield dramatic improvements for enterprises, saving time and money in a number of areas:

| | Before MCP | After MCP |
| --- | --- | --- |
| Integration | Custom connectors per model/tool | One MCP interface works across models and tools |
| Deployment time | Long, expensive due to bespoke engineering | 50–70% faster, lower costs via reuse (quiq.com, Palma AI, Humanloop, aibase.com) |
| Data access | AI blind to system data, context lost | Full real-time context: CMS, CRM, EHR, ERP |
| Risk management | Manual lookup, delayed fraud detection | Instant cross-system checks reduce fraud by 30% |

The future is open

The world’s most influential AI leaders are aligning behind MCP because they recognize that the future of AI is open and contextual, and its potential is nearly impossible to overstate. In keeping with our commitment to open and intelligent orchestration, Avaya is proud to help lead the charge, bringing MCP to the world of customer experience.

Glossary

Full glossary of terms below.

| Term | Definition | Why It Matters |
| --- | --- | --- |
| MCP (Model Context Protocol) | An open protocol that standardizes how AI models interact with tools, APIs, and memory via structured context objects. | Enables reliable, interpretable, and scalable AI behavior across workflows and enterprise systems. |
| Context | A bundle of structured information passed to a model, such as user ID, session history, roles, time, or tool availability. | Empowers models to generate relevant, personalized, and situational responses. |
| Model | The generative AI (e.g., Claude, GPT-4, Gemini) that receives the MCP context and generates responses. | The “brain” in the loop, which uses context to make smarter, more aligned decisions. |
| Client | The application or interface (e.g., chat window, agent framework, customer portal) that gathers and sends context to the model. | The user-facing entry point that initiates requests and interactions. |
| Server | The logic layer that hosts tools, APIs, functions, and data sources the model can use via MCP. | The action-execution backend, ensuring AI outputs can trigger real-world business actions. |
| Tools | Discrete functions or APIs (e.g., “search knowledge base,” “check ticket status,” “update CRM”) exposed to the model via MCP. | Allow models to go beyond language and take actions on external systems. |
| Schemas | The structured definitions (in JSON or YAML) for how tools and context should be represented and passed to models. | Create a common language between client, server, and model, improving consistency and debugging. |
| Memory | Persistent context retained across sessions (user history, preferences, past actions) provided to the model. | Enables continuity and personalization over time, like an agent that “remembers” a customer. |
| Ephemeral Context | Short-lived, session-specific information (e.g., current task, temporary variables). | Keeps AI responses focused and efficient for the current interaction only. |
| Agent | An AI system that can take actions, invoke tools, and make decisions based on MCP context, often used in autonomous or semi-autonomous workflows. | MCP provides the backbone for enabling safe, useful, and controllable agent behavior. |
| Orchestration | Coordinating multiple steps, tools, and model outputs to achieve a higher-order business goal. | MCP simplifies orchestration by giving models access to structured context and tools in one protocol. |
| Observability | The ability to inspect what context was passed, what decisions the model made, and how tools were used. | Crucial for debugging, compliance, and enterprise trust in AI systems. |
| Interoperability | The ability for different AI models and platforms to work with a shared structure of tools and context. | MCP enables plug-and-play AI across different vendors and architectures. |